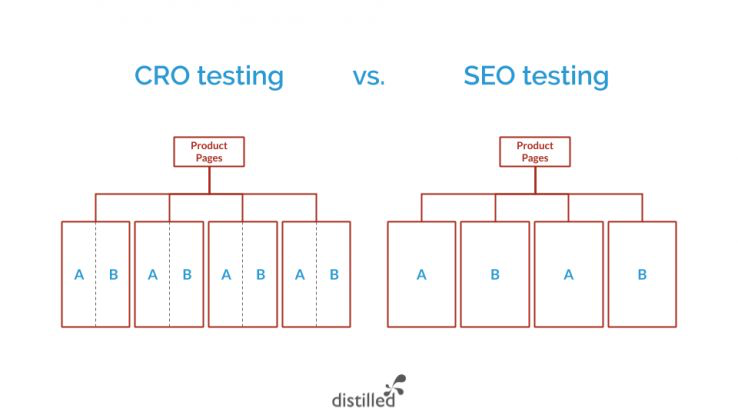

Posted by Portent

This blog was written by Tim Mehta, a former Conversion Rate Optimization Strategist with Portent, Inc.

Running A/B/n experiments (aka “Split Tests”) to improve your search engine rankings has been in the SEO toolkit for longer than many would think. Moz actually published an article back in 2015 broaching the subject, which is a great summary of how you can run these tests.

What I want to cover here is understanding the right times to run an SEO split-test, and not how you should be running them.

I run a CRO program at an agency that’s well-known for SEO. The SEO team brings me in when they are preparing to run an SEO split-test to ensure we are following best practices when it comes to experimentation. This has given me the chance to see how SEOs are currently approaching split-testing, and where we can improve upon the process.

One of my biggest observations when working on these projects has been the most pressing and often overlooked question: “Should we test that?”

Risks of running unnecessary SEO split-tests

Below you will find a few potential risks of running an SEO split-test. You might be willing to take some of these risks, while there are others you will most definitely want to avoid.

Wasted resources

With on-page split-tests (not SEO split-tests), you can be much more agile and launch multiple tests per month without expending significant resources. Plus, the pre-test and post-test analyses are much easier to perform with the calculators and formulas readily available through our tools.

With SEO split-testing, there’s a lot of heavy lifting that goes into planning a test, setting it up, and then executing it. What you’re essentially doing is taking an existing template of similar pages on your site and splitting it up into two (or more) separate templates. This requires significant development resources and poses more risk, as you can’t simply “turn the test off” if things aren’t going well.
As you probably know, once you’ve made a change that hurts your rankings, it’s a lengthy uphill battle to get them back.

With SEO split-testing, the pre-test analysis to estimate how long the test needs to run to reach statistical significance is more complex and more time-consuming. It’s not as simple as, “Which one gets more organic traffic?” because each variation you test has unique attributes. For example, if you choose to split-test the product page template on half of your products versus the other half, the actual products in each variation can play a part in its performance. Therefore, you have to create a projection of organic traffic for each variation based on the pages that exist within it, and then compare the actual data to your projections. Using your projection as your main indicator of failure or success is inherently dangerous, because a projection is just an educated guess and does not necessarily reflect reality.

For the post-test analysis, since you’re measuring organic traffic against a hypothesized projection, you have to look at other data points to determine success. Evan Hall, Senior SEO Strategist at Portent, explains:

“Always use corroborating data. Look at relevant keyword rankings, keyword clicks, and CTR (if you trust Google Search Console). You can safely rely on GSC data if you’ve found it matches your Google Analytics numbers pretty well.”

Planning a test, developing it on your live site, “ending” the test (if needed), and analyzing it after the fact are all demanding tasks. Because of this, you need to make sure you’re running experiments with a strong hypothesis and enough of a difference between the variation and the original that you can expect a significant difference in performance. You also need to corroborate the data that would point to success, as organic traffic versus your projection alone isn’t reliable enough to be confident in your results.
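
To make the “actual versus projection, plus corroborating data” idea concrete, here is a minimal Python sketch. It is not the method Portent uses. The class `VariationResult`, the function `summarize`, and every number below are hypothetical and made up for illustration, and the sketch assumes you already have a projection and test-window totals for each group of pages.

```python
from dataclasses import dataclass


@dataclass
class VariationResult:
    """Test-window totals for one group of pages (all fields are hypothetical)."""
    name: str
    projected_sessions: float  # forecast organic sessions for the test window
    actual_sessions: float     # observed organic sessions (e.g. from analytics)
    impressions: int           # search impressions (e.g. from Google Search Console)
    clicks: int                # search clicks (e.g. from Google Search Console)

    @property
    def lift_vs_projection(self) -> float:
        """Relative gap between actual and projected sessions."""
        return (self.actual_sessions - self.projected_sessions) / self.projected_sessions

    @property
    def ctr(self) -> float:
        """Click-through rate from the search results."""
        return self.clicks / self.impressions


def summarize(control: VariationResult, variant: VariationResult) -> dict:
    """Compare the variant to the control on traffic and on a corroborating metric."""
    lift_gap = variant.lift_vs_projection - control.lift_vs_projection
    ctr_gap = variant.ctr - control.ctr
    return {
        "lift_gap": lift_gap,
        "ctr_gap": ctr_gap,
        # Only treat the result as promising when both signals point the same way.
        "signals_agree": (lift_gap > 0) == (ctr_gap > 0),
    }


# Made-up numbers, purely for illustration.
control = VariationResult("original template", 10_000, 10_200, 20_000, 500)
variant = VariationResult("new template", 8_000, 8_800, 16_000, 480)
print(summarize(control, variant))
```

This only lines the numbers up side by side. It is not a significance test, and a projection that is off will skew `lift_gap` no matter how carefully the rest is done, which is why the CTR comparison sits next to it.
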
Unable to scale the results

There are many factors that go into your search engine rankings that are out of your hands. These add up to a large number of outside variables that can impact your test results and lead to false positives or false negatives. This hurts your ability to learn from the test: was it our variation’s template or another outside factor that led to the results? Unfortunately, with Google and other search engines, there’s never a definitive way to answer that question.

Without validating that it was the exact changes you made that led to the results, you won’t be able to scale the winning concept to other channels or parts of the site. That said, if you are focused more on individual outcomes than on learnings, this might not be as much of a risk for you.

When to run an SEO split-test

Uncertainty around keyword or query performance

If the pages in a particular category attract a wide variety of keywords/queries that users search for when looking for that topic, you can safely engage in a meta title or meta description SEO split-test. From a conversion rate perspective, a keyword that more closely matches a user’s intent will generally lead to higher engagement. Although, as mentioned, most of your tests won’t be winners.

For example, we have a client in the tire retail industry who shows up in the SERPs for all kinds of “tire” queries. This includes things like winter tires, seasonal tires, performance tires, etc. We hypothesized that using the more specific phrase “winter tires” instead of “tires” in our meta titles during the winter months would lead to a higher CTR and more organic traffic from the SERPs. While our results ended up being inconclusive, we learned that changing this meta title did not hurt organic traffic or CTR, which gives us a prime opportunity for a follow-up test.

You can also utilize this tactic to test out a higher-volume keyword in your metadata. But this approach is also