Predictive SEO: How HubSpot Saves Traffic We Haven’t Lost Yet

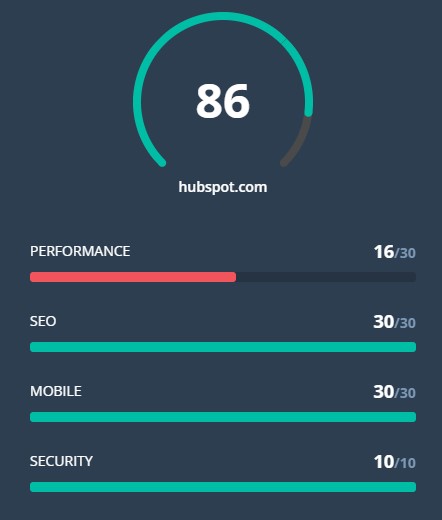

This post is a part of Made @ HubSpot, an internal thought series through which we extract lessons from experiments conducted by our very own HubSpotters.

Have you ever tried to carry your clean laundry upstairs by hand, only to have things keep falling out of the giant blob of clothing in your arms? Growing organic website traffic is a lot like that. Your content calendar is loaded with fresh ideas, but with every web page you publish, an older page drops in the search engine rankings.

Getting SEO traffic is hard, but keeping it is a whole other ball game. Content tends to "decay" over time because of new content from competitors, constantly shifting search engine algorithms, and myriad other reasons. You're struggling to move the whole site forward, but pages keep leaking traffic wherever you're not paying attention.

Recently, the two of us (Alex Birkett and Braden Becker 👋) developed a way to find this traffic loss automatically, at scale, and before it even happens.

The Problem With Traffic Growth

At HubSpot, we grow our organic traffic by making two trips up from the laundry room instead of one.

The first trip is with new content, targeting new keywords we don't rank for yet. The second trip is with updated content: we dedicate a portion of our editorial calendar to finding which content is losing the most traffic (and leads) and reinforcing it with new content and SEO-minded maneuvers that better serve certain keywords. It's a concept we (and many marketers) have come to call "historical optimization."

But there's a problem with this growth strategy. As our website's traffic grows, tracking every single page becomes unwieldy, and selecting the right pages to update is even tougher.

Last year, we wondered whether there was a way to find blog posts whose organic traffic is merely "at risk" of declining, both to diversify our update choices and, perhaps, to make traffic more stable as our blog grows.

Restoring Traffic vs. Protecting Traffic

Before we talk about the absurdity of trying to restore traffic we haven't lost yet, let's look at the benefits.

When you view the performance of a single page, declining traffic is easy to spot. For most growth-minded marketers, a downward-pointing traffic trendline is hard to ignore, and there's nothing quite as satisfying as seeing that trend recover.

But all traffic recovery comes at a cost. Because you can't know where you're losing traffic until you've lost it, the time between the traffic's decline and its recovery is a sacrifice of leads, demos, free users, subscribers, or whatever similar growth metric comes from your most interested visitors.

You can see that visualized for an individual blog post in the organic trend graph below. Even with the traffic saved, you've missed out on opportunities to support your sales efforts downstream.

If you had a way to find and protect (or even increase) the page's traffic before it needs to be restored, you wouldn't have to make the sacrifice shown in the image above. The question is: how do we do that?

How to Predict Falling Traffic

To our delight, we didn't need a crystal ball to predict traffic attrition. What we did need was SEO data suggesting that particular blog posts would lose traffic if their current ranking trends continued. (We also needed to write a script that could extract this data for the whole website; more on that in a minute.)

High keyword rankings are what generate organic traffic for a website. Moreover, the lion's share of that traffic goes to sites fortunate enough to rank on the first page, and the reward is all the greater for keywords that receive a particularly high number of searches per month. If a blog post slips off Google's first page for a high-volume keyword, it's toast.
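The page-1 boundary described above can be made concrete in a few lines. This is a minimal sketch, not HubSpot's script: it assumes 10 organic results per page (the same assumption the article makes, with page 1 covering positions 1-10), and the names `results_page` and `slipped_off_page_one` are hypothetical.

```python
RESULTS_PER_PAGE = 10  # assumption: positions 1-10 make up page 1 of Google


def results_page(position: int) -> int:
    """Return the results page a 1-based ranking position falls on."""
    if position < 1:
        raise ValueError("ranking positions start at 1")
    return (position - 1) // RESULTS_PER_PAGE + 1


def slipped_off_page_one(previous_position: int, current_position: int) -> bool:
    """True when a keyword used to rank on page 1 but no longer does."""
    return results_page(previous_position) == 1 and results_page(current_position) > 1


# Hypothetical example: a post that fell from position 6 to position 14.
print(results_page(10), results_page(11), results_page(14))
print(slipped_off_page_one(6, 14))
```

The integer division maps positions 1-10 to page 1, 11-20 to page 2, and so on, so a drop from 6 to 14 is flagged while a climb from 14 to 6 is not.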
Keeping in mind the relationship between keywords, keyword search volume, ranking position, and organic traffic, we knew this was where we'd see the prelude to a traffic loss. Luckily, the SEO tools at our disposal can show us that ranking slippage over time:

The image above shows a table of keywords for which a single blog post ranks. For one of those keywords, the post ranks in position 14 (page 1 of Google consists of positions 1-10). The red boxes highlight that ranking position, as well as the keyword's hefty volume of 40,000 monthly searches.

Even sadder than this article's position-14 ranking is how it got there. As the teal trendline above shows, this post was once a high-ranking result but dropped steadily over the following weeks. The post's traffic corroborated what we saw: a noticeable dip in organic page views shortly after the post dropped off page 1 for this keyword.

You can see where this is going. We wanted to detect these ranking drops when a post is on the verge of leaving page 1 and, in doing so, restore traffic we were "at risk" of losing. And we wanted to do this automatically, for dozens of blog posts at a time.

The "At Risk" Traffic Tool

The way the At Risk Tool works is actually fairly simple. We thought of it in three parts: Where do we get our input data? How do we clean it? And what outputs of that data allow us to make better decisions when optimizing content?

First, where do we get the data?

1. Keyword Data from SEMRush

What we wanted was keyword research data at the property level. We wanted to see all of the keywords that hubspot.com ranks for, particularly on blog.hubspot.com, along with all the data associated with those keywords.

Some fields that are valuable to us are our current search engine ranking, our past search engine ranking, the monthly search volume of the keyword, and, potentially, the keyword's value (estimated with keyword difficulty or CPC).
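To show how those fields could feed an "at risk" screen, here is a minimal Python sketch. It is an illustration under stated assumptions, not the At Risk Tool itself: the `KeywordRow` fields mirror the ones listed above (current position, previous position, monthly search volume, CPC) but are not SEMRush's actual export column names, and the rule that a keyword is at risk when it still ranks in positions 7-10 and has lost ground since the previous snapshot is a hypothetical threshold. Posts are then ranked by the total monthly search volume of their at-risk keywords.

```python
from collections import defaultdict
from dataclasses import dataclass

PAGE_ONE_LAST_POSITION = 10  # page 1 of Google = positions 1-10
VERGE_START = 7  # hypothetical: positions 7-10 count as "on the verge" of page 2


@dataclass
class KeywordRow:
    url: str                # ranking page (hypothetical paths below)
    keyword: str
    position: int           # current ranking position
    previous_position: int  # ranking position at the previous snapshot
    search_volume: int      # monthly searches
    cpc: float = 0.0        # optional value signal; unused in this sketch


def is_at_risk(row: KeywordRow) -> bool:
    """Still on page 1, near its bottom edge, and worse than last time."""
    return (
        VERGE_START <= row.position <= PAGE_ONE_LAST_POSITION
        and row.position > row.previous_position
    )


def volume_at_risk_by_url(rows: list[KeywordRow]) -> list[tuple[str, int]]:
    """Sum monthly search volume of at-risk keywords per URL, largest first."""
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        if is_at_risk(row):
            totals[row.url] += row.search_volume
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical rows, not real HubSpot data.
rows = [
    KeywordRow("/post-a", "keyword one", 9, 4, 40_000),
    KeywordRow("/post-a", "keyword two", 3, 3, 12_000),
    KeywordRow("/post-b", "keyword three", 8, 6, 5_000),
    KeywordRow("/post-c", "keyword four", 14, 8, 30_000),  # already off page 1
]
print(volume_at_risk_by_url(rows))
```

Weighting by search volume reflects the point made earlier that high-volume keywords carry the biggest traffic reward; a keyword that has already fallen to page 2 is left out here because it is a restore candidate rather than an at-risk one.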
